DoubleVerify’s Response to Adalytics’ March 28 GIVT Report

Key Takeaways from DV’s Response to Adalytics’ March 28 GIVT Report

- The Report’s Core Claims Regarding DV Are Inaccurate – The report incorrectly suggests advertisers are billed for GIVT impressions and that pre-bid verification “doesn’t work.” In reality, GIVT is removed post-bid from billable counts, per MRC and TAG standards, and DV pre-bid solutions remove both GIVT and SIVT (true “ad fraud”) at scale.

- All 115 Examples Reviewed: DV Detected All Eligible Impressions – The DV Fraud Lab reviewed every impression cited, and all eligible impressions were accurately identified and managed.

- Methodology Relies Heavily on a Mischaracterized Bot – Most of the report’s examples, and much of the subsequent news coverage, involve URLScan, a non-declared, headless browser bot. Adalytics repeatedly misrepresents this as “declared bot” traffic, a mischaracterization according to URLScan’s own CEO. Additionally, URLScan is not on the IAB Spiders & Bots List.

- Adalytics Conflates GIVT with SIVT – To exaggerate GIVT’s impact, Adalytics blurs GIVT with SIVT, two distinct categories of invalid traffic. This inflates perceived GIVT waste and misrepresents fraud detection effectiveness. GIVT and SIVT are standard, industry-accepted categories defined by the IAB, the MRC, and other industry bodies.

- Verification Works Even When Pre-Bid Isn’t Active – DV accurately filters impressions post-bid when pre-bid filtering is not enabled or supported, so advertisers are not charged for invalid traffic.

- GIVT Detection by DV Is Accurate for Known Bots & Data Centers – DV’s recent testing confirmed 100% detection post-bid for all GIVT from Known Bots and Known Data Centers cited in the report.

- Not All Served Ads Are Billable – Just because an ad appears doesn’t mean the advertiser paid for it. DV only includes valid impressions in billable counts, per industry standards, meaning invalid traffic is filtered appropriately even if the ad renders.

- Adalytics Misattributes Third-Party Code to DV – The report references DV “source code” 75 times, but it is actually referring to third-party DSP code, not DV’s.

- DV Leads in Combating Real Ad Fraud (SIVT) – DV has uncovered major fraud schemes, worked with federal agencies, and was instrumental in supporting the Department of Justice and FBI in the industry’s only documented ad fraud conviction, that of Russian cybercriminal Aleksandr Zhukov. There is no public evidence that Adalytics has any comparable, action-oriented record of combating SIVT.

- The “Mystery Bot” Raises More Questions – Adalytics won’t name the third bot in its study, making a true “peer review” impossible and further undermining the transparency of its findings.

- Pre-Bid Solutions That Deliver Real Value – DV’s pre-bid solution doesn’t just filter IVT. Every day, DV analyzes billions of potential ad placements as part of a broader pre-bid protection framework that quietly delivers real, measurable value. DV’s pre-bid technology also powers more than 2,000 data segments spanning not just fraud and invalid traffic, but also viewability, brand safety and suitability, contextual, and other pre-bid campaign settings, giving advertisers superior coverage, proven protection, and powerful performance across all devices and formats.

Response to Adalytics’ March 28 GIVT Report

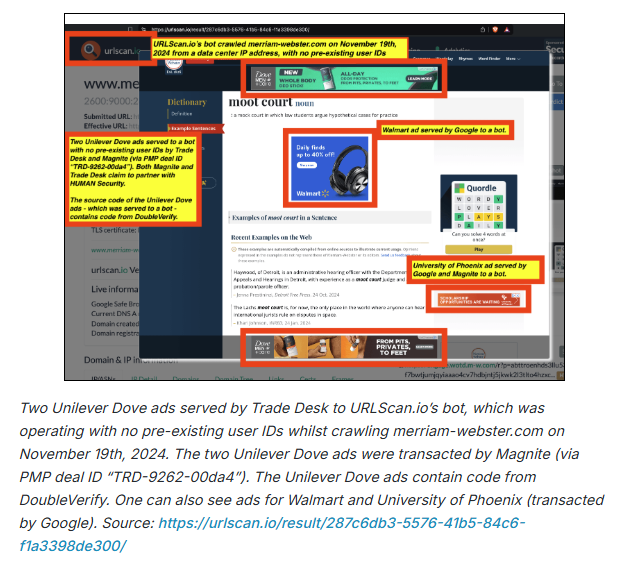

On March 28, 2025, Adalytics issued a report, covered by The Wall Street Journal, which misrepresented how General Invalid Traffic (GIVT) is handled by leading ad verification vendors, including DoubleVerify (DV), Integral Ad Science (IAS), and HUMAN. The report focuses on alleged discrepancies in GIVT detection and avoidance, and suggests “that advertisers were billed by ad tech vendors for ad impressions served to declared bots.”

Adalytics’ report is inaccurate and misleading, based on the incorrect premise that advertisers pay for GIVT. GIVT that is not filtered out pre-bid is removed post-bid from the set of billable impressions shared with advertisers. Additionally, the study ignores DV’s substantial pre-bid SIVT capabilities, which are a core part of the value proposition. SIVT represents what the industry considers true “ad fraud.”

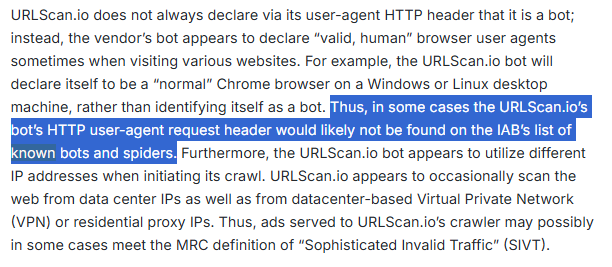

The report is also methodologically inaccurate, as over 90% of the examples are based on URLScan, a bot that, by default, does not declare itself. When a DV representative asked URLScan’s CEO, “Do you see [URLScan] as something that should be self-declared when interacting with websites or ad systems?”, he responded: “Our scanner does not announce itself, that would defeat the purpose of the tool.”

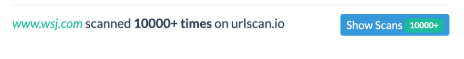

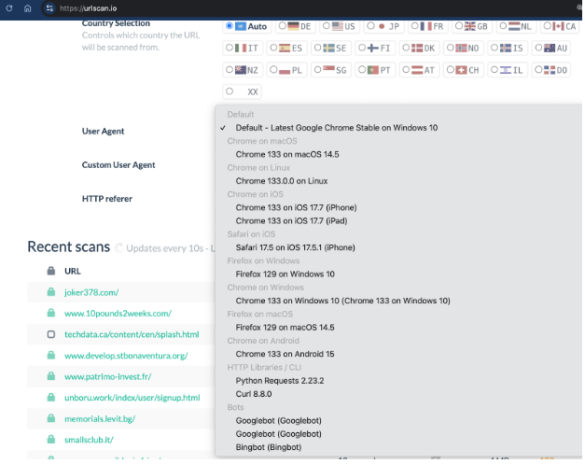

Accordingly, URLScan is not on the IAB Known Spiders & Bots List:

Methodologically, this immediately undermines Adalytics’ findings, which, again, suggest that “advertisers were billed by ad tech vendors for ad impressions served to declared bots.” To further emphasize Adalytics’ focus on declared bots, note that the company refers to its study as “the largest analysis of declared bot traffic in the context of digital advertising.”

However, at least one of the three bots in question—URLScan, which accounts for over 90% of the examples in the public report—is not declared.

Adalytics repeatedly obfuscates this point in its report:

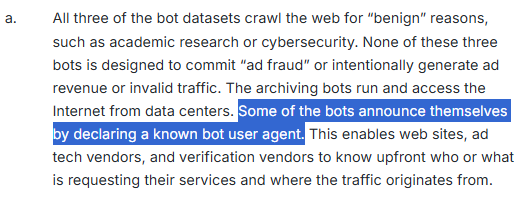

Although it references just three bots, Adalytics uses vague language like “some” instead of stating exactly which of the three declare themselves. (It does not name the third, so it is quite possible that only one of the three declares; more on that later.) Adalytics also has access to the IAB Spiders & Bots List, per the report, yet chooses not to disclose that URLScan is not on it. (DV ingests the IAB Spiders & Bots List as part of our standard process, but also asked the IAB Tech Lab to independently confirm that URLScan is not on the list, which it did.)

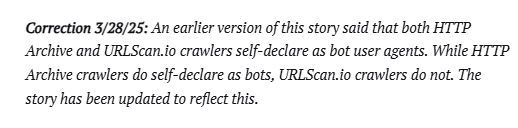

Unfortunately, errors like these create confusion. As an example, AdExchanger, which was briefed under embargo by Adalytics but chose not to speak to verification firms prior to publication, published inaccurate information in its coverage of the report, writing, “URLScan.io crawlers typically self-identify.” It later issued a correction at the bottom of its article, once it became clear that URLScan is not a self-declaring bot.

Unfortunately, it’s difficult for AdExchanger to fully correct the error, as doing so undermines the report’s “declared bot” premise. See additional inaccurate claims here where, despite the correction at the bottom of the article, AdExchanger still suggests that URLScan appears on the IAB Known Bots & Spiders List, which it does not.

See this occur again in another AdExchanger piece about the report:

Because of the error in Adalytics’ methodology, The WSJ makes a similar mistake. It states that Adalytics’ report focuses on “cases when bots identified themselves as such, because they were used for benign purposes like archiving websites and detecting security threats.” Yet the article goes on to cite URLScan, even though, by default, URLScan does not declare itself. (To be clear, DV still overwhelmingly blocks URLScan pre-bid, even when it doesn’t declare itself, depending on the overall data and telemetry we have access to for each impression. See more in the URLScan section of this article.)

After DV noted this to The WSJ, the publication added more context to the piece reflecting DV’s perspective, which can be found here. In addition, The WSJ published a patently false claim about DV, which was later corrected.

Part of a Pattern

This is part of a broader pattern with Adalytics’ reports, where errors and misleading information are often spread with little accountability.

An example of this is Adalytics’ report on user ID misdeclaration. In that report, Adalytics cites verification vendors, Moat and HUMAN, to fault them for not “catching” alleged ID misdeclaration, despite the fact that verification vendors do not collect IDs. This led to an article by AdExchanger in which they incorrectly fault verification, writing, “Where are the verifiers?” (Adalytics is currently in litigation over the claims made in this report.)

In instances where Adalytics has received critical questions about its reports, it has quietly edited or corrected the record post-publication. For example, in Adalytics’ first version of its Forbes report, it cites one vendor as being owned by a Chinese entity. The likely purpose is to frame the vendor negatively. However, this information appears to be inaccurate and Adalytics has seemingly removed it from the version currently on its website.

In another example, Adalytics published a piece about IAS titled “Major brands appear to be blocking ad targeting keywords,” in coordination with Check My Ads. After it was revealed that the findings were inaccurate, Adalytics issued a correction, writing, “I’ve decided to remove any ambiguity and edited the original post to remove these brands.” Again, this is part of a broader pattern.

Another Example

Adalytics’ latest report again contains errors and misrepresentations, while creating harmful confusion across the market. Ultimately, the Adalytics reports are intended to promote and sell its own services while creating confusion and undermining the credibility of ad verification and other ad tech providers. There is no apparent transparent review process or any willingness to engage third parties about its methodology or findings.

The errors in Adalytics’ reports are compounded by their mischaracterizations of how ad verification works to the U.S. government—sharing incomplete information with federal agencies and presenting misstated claims to members of Congress seemingly in an attempt to provoke scrutiny of competitors. These efforts are often carried out in collaboration with Check My Ads, an organization that has repeatedly attacked ad tech companies with false and inaccurate claims.

DV supports all good-faith efforts to improve transparency and accountability in digital advertising and verification. Unfortunately, Adalytics’ latest report is designed to manufacture distrust and criticize effective solutions. As such, it’s important we correct the record.

The Facts

Here are the facts: DV has reviewed all 115 examples cited by Adalytics in its report that included alleged bot impressions and can confirm that every eligible¹ impression was accurately detected by DV. See this table/overview, which we’ll break down at length in the following document.

This underscores the fact that Adalytics’ report misrepresents both the nature and volume of GIVT, as well as the trusted, widely adopted tools the industry relies on for filtering this traffic. The following analysis and review outlines the most serious flaws in Adalytics’ report.

1. “Eligible” refers to impressions for which DV retains full underlying data in accordance with client data retention policies.

Setting the Record Straight on Pre-Bid Protection and Value

Before we get into the analysis, it is worth addressing one of the report’s most significant inaccuracies: the claim or implication that advertisers are paying for “fraud filtering solutions that don’t work.” This is simply wrong. Advertisers that use pre-bid solutions like DV’s are not only receiving protection against general invalid traffic (GIVT), but are also filtering out a substantial volume of sophisticated invalid traffic (SIVT), which is far more complex and damaging. Moreover, fraud filtration is just one component of DV’s Authentic Brand Suitability (ABS) solution, which includes additional protections tied to context, suitability, and other critical media quality signals. In no instance is an advertiser paying for a filtering solution and not receiving value.

To be clear, pre-bid fraud detection delivers immense value across multiple vectors, not just GIVT avoidance. The report focuses narrowly on a fraction of impressions that fall into known GIVT and ignores the broader effectiveness of these solutions. Based on the billions of ad transactions we evaluate daily, less than 0.3% of impressions may involve GIVT that is not filtered pre-bid due to technical limitations (but is removed from billable counts post-bid). That is a small subset of a much larger protection framework that blocks billions of invalid impressions and supports advertisers in optimizing their media spend. This context must be clearly stated and understood.

In accordance with MRC and TAG standards, DV removes all identified GIVT from billable impression counts. DV’s data on GIVT is fully available to advertisers in DV’s GIVT Disclosures Reports, available in DV Pinnacle. In this process, DV’s data and pre- and post-bid filtration support advertiser transparency and help promote accurate billing across the ecosystem.

What Is GIVT and How Is it Managed?

The ad industry, in alignment with organizations like the MRC, IAB, and TAG, differentiates between GIVT (General Invalid Traffic) and SIVT (Sophisticated Invalid Traffic). GIVT is non-malicious IVT, such as search engine crawlers like GoogleBot, that can be identified using routine means of filtration. By contrast, SIVT is more deceptive and involves fraudulent behavior, like ad stacking or domain spoofing. (For more background, see this DV GIVT blog.)

GIVT can be managed either before (pre-bid) or after (post-bid) the bidding process. Pre-bid allows advertisers to block GIVT before an ad is served, while post-bid identifies IVT after delivery, automatically removing invalidated impressions from the billable counts. Both approaches are accepted under industry standards established by the MRC and TAG.
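To make the post-bid step concrete, here is a minimal sketch of how invalidated impressions could be excluded from a billable count. It is an illustration only, not DV’s implementation: the `Impression` class, the `ivt_label` field, and the `billable_impressions` function are hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Impression:
    impression_id: str
    # Hypothetical label assigned by post-bid detection:
    # "GIVT", "SIVT", or None when the impression is considered valid.
    ivt_label: Optional[str]


def billable_impressions(served: List[Impression]) -> List[Impression]:
    """Keep only impressions that were not invalidated post-bid."""
    return [imp for imp in served if imp.ivt_label is None]


# Four ads were served, but two were invalidated after delivery.
served = [
    Impression("a1", None),
    Impression("a2", "GIVT"),
    Impression("a3", None),
    Impression("a4", "SIVT"),
]
billable = billable_impressions(served)
print(f"served={len(served)} billable={len(billable)}")
```

The point of the sketch is that an ad can be served and still be absent from the billable total.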

In fact, the MRC requires only post-bid measurement and filtration for compliance. According to the MRC IVT Guidelines, “back-end detection and filtration techniques are required for compliance with the Standard. Digital measurement organizations employing up-front IVT filtration techniques must do so in combination with required back-end detection and filtration techniques.”

Similarly, TAG (Trustworthy Accountability Group) states that filtering can occur either pre-bid or post-bid, as long as monetizable transactions are filtered to remove bot impressions. In its Certified Against Fraud guidelines, TAG repeats the following phrase: “removal pre-bid or post-bid as long as all of the monetizable transactions (including impressions, clicks, conversions, etc.) that it handles are filtered.”

Adalytics is not accredited by MRC, TAG, or any industry-recognized third-party, as this requires rigorous transparency that Adalytics may not be willing to undergo. Instead, the Adalytics report mischaracterizes the MRC and TAG guidelines to support its conclusions. Note this example from their report:

Adalytics’ use of the phrase “bot filtration” is meant to suggest pre-bid filtration is the necessary standard for certifications when it’s not.

The MRC IVT Guidelines are a comprehensive and thoughtful framework designed to help the industry mitigate harmful traffic while still accounting for the legitimate functions GIVT may serve. The Adalytics report misrepresents long-established standards, while also distorting how IVT should be handled to downplay its own lack of any industry accreditation or certification. Brands should expect all of their analytics partners to meet transparent, established industry standards, and if they don’t, ask them why they do not.

DV is fully compliant with the MRC IVT Guidelines, which require post-bid filtration and the removal of GIVT from reporting and monetized impression counts. As a result, DV is accredited by the MRC for pre-bid IVT detection across all integration partners, as well as for post-bid GIVT and SIVT detection and measurement across desktop, mobile web, mobile apps, and CTV. DV has also earned the TAG Certified Against Fraud seal every year since the program’s inception. DV’s data on GIVT is fully available to advertisers in DV’s GIVT Disclosures Reports, available in DV Pinnacle.

The Report Conflates GIVT with SIVT

Our data shows the overall average of programmatic GIVT rates from declared bots and known data centers remains well below 1%, both on platforms that support DV’s pre-bid GIVT filtering and those that do not. This is because GIVT impressions are relatively straightforward to identify and remove using standard industry resources, such as known spiders and bots lists or known data center IP registries.
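As a rough illustration of list-based GIVT identification, the sketch below checks a user-agent against a bot token list and an IP address against a data center registry. Everything in it is an assumption made for the example: the token list, the network range (a reserved documentation range), the label strings, and the `classify_givt` function are hypothetical stand-ins, not the contents of any industry list or DV’s logic.

```python
import ipaddress
from typing import Optional

# Hypothetical stand-ins for a known spiders-and-bots list and a
# known data center IP registry. Real lists are far larger.
KNOWN_BOT_TOKENS = ("googlebot", "bingbot")
KNOWN_DATA_CENTER_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]


def classify_givt(user_agent: str, ip: str) -> Optional[str]:
    """Return a GIVT label if a list matches, otherwise None."""
    ua = user_agent.lower()
    if any(token in ua for token in KNOWN_BOT_TOKENS):
        return "GIVT - Known Bot"
    address = ipaddress.ip_address(ip)
    if any(address in network for network in KNOWN_DATA_CENTER_NETWORKS):
        return "GIVT - Known Data Center"
    return None


googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
browser_ua = "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36"

print(classify_givt(googlebot_ua, "198.51.100.7"))
print(classify_givt(browser_ua, "203.0.113.7"))
print(classify_givt(browser_ua, "198.51.100.7"))
```

A lookup like this only catches traffic that declares itself or originates from a registered range, which is why undeclared tools require additional signals.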

As declared GIVT can be addressed through platform-level registries, the real value that DV provides—and what clients really rely on us for—is pre-bid detection of more complex “benign” spiders and bots (like URLScan – more on that later) and harmful SIVT that registries can’t catch.

DV also employs its own GIVT analysis techniques and methods to ensure coverage and protection across all areas of GIVT outlined in the IVT guidelines. Additionally, DV offers independent, cross-platform protection for GIVT, rather than relying on the variable protocols of individual platforms, which is why many advertisers choose to use our technology for this purpose.

For advertisers that prefer to use DV for GIVT avoidance at the pre-bid level, our filtration has been shown to be both accurate and effective. When DV pre-bid avoidance is enabled, GIVT from known (self-declared) bots drops from below 1% to below 0.03% of programmatic impressions. This represents a 75% to 98% reduction, depending on client settings, compared with unfiltered programmatic impressions, which shows that GIVT pre-bid avoidance is highly effective, especially at reducing post-bid reconciliation. Even for the remainder, DV identifies these bots post-bid in virtually all cases. The vast majority of GIVT is therefore effectively filtered out, and current standards and technologies are doing their job.

However, this poses a challenge to Adalytics’ “rampant GIVT waste” narrative. To sustain that narrative, the report conflates GIVT with SIVT, dramatically exaggerating the scale of GIVT “waste” (i.e., GIVT impressions advertisers allegedly pay for). By manufacturing a problem, Adalytics creates an opening to discredit the very solutions—like verification—that are designed to solve it.

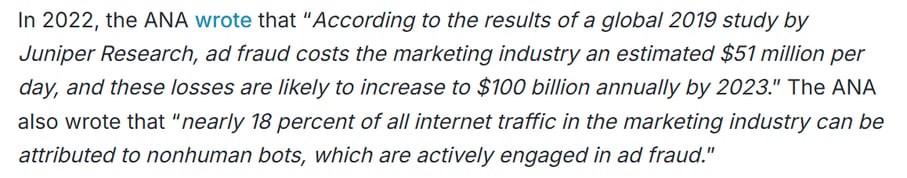

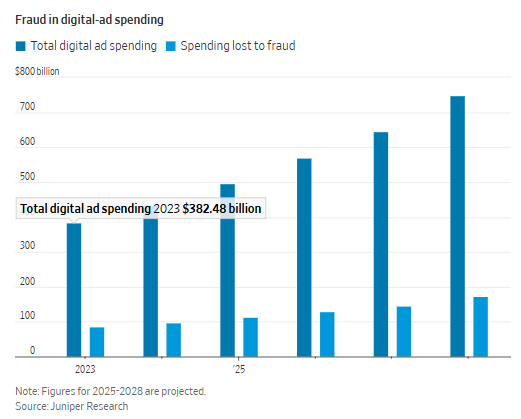

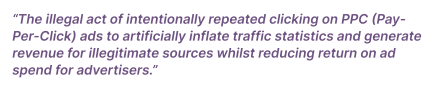

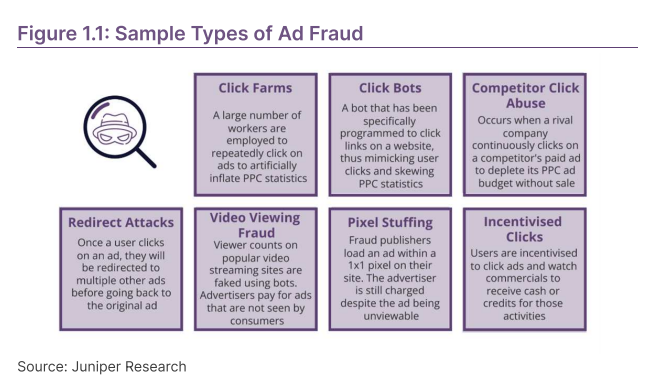

To that end, Adalytics cites Juniper Research in its report:

Juniper’s research is also cited in The WSJ’s coverage of Adalytics’ report:

However, when reviewing the actual source material, Juniper clarifies that its definition refers to SIVT fraud, not GIVT waste. See here:

Juniper also describes the various forms of ad fraud its research and projections cover:

Adalytics’ conflation here (amplified by The WSJ), again, is misleading.

Similarly, Adalytics uses the word “fraud” 128 times in its report, even though it later clarifies that none of the bots cited were “actively seeking to commit ad fraud.” (The term “waste,” which is more fact-based, is used twice.) As a result, several outlets have referred to Adalytics’ report as a report on “ad fraud.”

Check My Ads, which collaborated with Adalytics on this research, also issued a press release claiming the report, which focused on three so-called GIVT bots, exposed “pervasive fraud within the digital advertising industry.” The release states “digital advertising fraud accounts for approximately $84 billion annually” without citation—but a quick search reveals it too originates from Juniper, and once again refers to SIVT, not GIVT.

This conflates IVT types to support a predetermined narrative and to denigrate verification services that actually work to reduce the billion-dollar SIVT totals being cited.

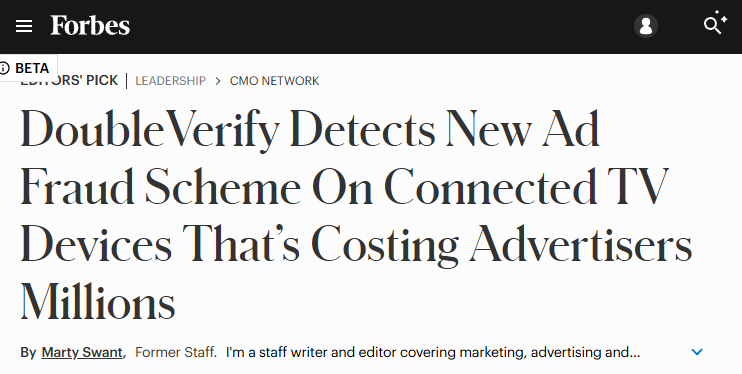

In fact, DV solutions are predominantly used to combat SIVT. Last year, for example, DV uncovered a network of fraudulent white noise apps, some of which were designed for use with young children. Each of these apps siphoned, at minimum, $225,000 per month — and millions annually — from unprotected advertisers. DV’s work publicly revealing the network prompted the fraudsters to change their operation overnight.

DV identifies over 2 million bot-infected and malware devices daily. It has detected major ad fraud schemes, saving clients millions of dollars, and has made these schemes public to better the industry. See more details on some of these schemes in publications like Forbes, WIRED, and FORTUNE.

Additionally, we have worked closely with government agencies and regulators to dismantle SIVT fraud operations that steal millions of dollars in ad spend from advertisers of all sizes. DV played a critical role in the U.S. Government’s 2021 conviction of Russian cybercriminal Aleksandr Zhukov, the self-proclaimed “King of Fraud,” who stole millions from U.S. advertisers. DV’s evidence, along with testimony from our CTO, was instrumental in securing his conviction and prison sentence. This effort, carried out in coordination with the Department of Justice and the FBI, is one of the only successful convictions of a digital ad fraudster in history.

This raises a broader question about Adalytics’ capabilities: to our knowledge, to date, the company has not demonstrated any ability to detect SIVT. It has never publicly disclosed a fraud scheme or published any SIVT research recognized by the broader ad fraud community.

On the blurring of GIVT and SIVT in Adalytics’ report—this is not a matter of semantics. Misrepresenting a limited, well-managed issue like GIVT as a broader industry failure does nothing to protect advertisers. Instead, it leads to incorrect conclusions and unnecessary confusion, while failing to meaningfully address SIVT fraud.

Worse, if the report convinces advertisers they don’t need robust protection, it puts their campaigns at greater risk for both GIVT and SIVT—ultimately increasing waste. It also undermines monetization for legitimate publishers, who may now be asked to block GIVT bots via robots.txt files, despite the fact that many of these bots serve important functions such as SEO indexing and security analysis.

The Reality of Adalytics’ Alleged Examples and Inaccurate Claims

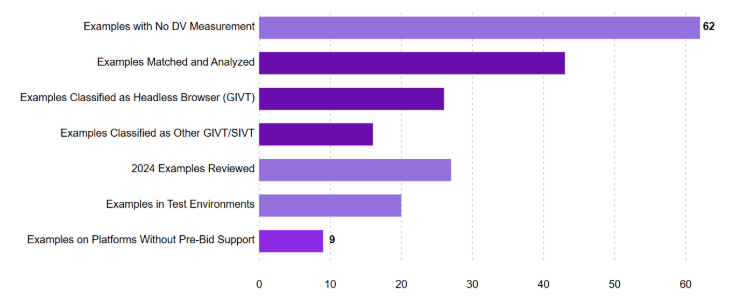

In its report, Adalytics cites 115 examples intended to suggest shortcomings in bot detection. However, a detailed forensic review by the DV Fraud Lab reveals that the report’s claims are deeply flawed—both methodologically and factually.

No Evidence of DV Measurement in Over Half of the Examples

Out of the 115 total examples, 62 (54%) lacked DV tags, signals, or events—meaning DV’s verification was never in place. As such, these cannot credibly be used to evaluate the effectiveness of DV’s technology.

Of the eligible² impressions in the remaining examples, all were accurately identified and appropriately managed by DV. The following is a breakdown of that analysis.

2. There were 37 impressions in 10 examples that were ineligible for review because they fell outside DV’s established data retention period, which aligns with industry standards and client data privacy requirements.

43 Examples Validated and Correctly Classified

DV was able to match impressions from 43 of the examples to historical measurement records. In every case, DV accurately identified and invalidated the impression post-bid. Nearly 80% were correctly classified as “GIVT – Headless Browser,” the appropriate classification for traffic associated with URLScan crawlers. The remaining impressions were all tagged under other GIVT or SIVT categories—primarily using IP signals from either GIVT Data Centers or SIVT Data Centers—reflecting the varied behaviors exhibited by headless browser traffic. These findings demonstrate the precision and reliability of DV’s fraud detection systems. (See DV’s blog post on URLScan detection for more detail.)
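The counts cited above can be cross-checked with simple arithmetic. The sketch below uses only figures stated in this document (115 examples, 62 without DV measurement, 43 matched, 10 outside the retention period). It assumes, as an inference not stated explicitly above, that the 43 matched examples and the 10 ineligible examples do not overlap and together make up the remainder; the variable names are introduced for illustration.

```python
# Figures taken from the text above.
total_examples = 115
no_dv_measurement = 62        # no DV tags, signals, or events
matched_and_invalidated = 43  # matched to DV measurement records
outside_retention = 10        # ineligible: outside the retention period

# Examples where DV measurement was present.
remaining = total_examples - no_dv_measurement

# Share of examples with no DV measurement, rounded to a whole percent.
share_without_dv = round(100 * no_dv_measurement / total_examples)

print(f"remaining examples: {remaining}")
print(f"share without DV measurement: {share_without_dv}%")

# Assumption: matched and ineligible examples are disjoint.
print(remaining == matched_and_invalidated + outside_retention)
```

Under that assumption the figures reconcile: the 53 remaining examples split into 43 matched and 10 ineligible.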

2024 Examples: Pre-Bid Avoidance Was Not Applicable

DV also reviewed the 27 examples that Adalytics cited from 2024. These examples were matched to impressions delivered under conditions that intentionally, but not maliciously, suppress or disable pre-bid filtering, such as staging environments, test client codes, or campaigns that had pre-bid Quality Targeting explicitly turned off. These configurations are typically chosen by advertisers or publishers and do not represent real-world ad-buying conditions. Even without pre-bid in effect, these impressions were invalidated post-bid, per industry standards.

Use of Test Environments and Non-Standard Configurations

Twenty of the examples included impressions that occurred within non-production test environments. These include QA campaigns and staging phases, environments where pre-bid avoidance is not enabled by default or is intentionally disabled for operational reasons. Adalytics’ inclusion of these impressions reflects a misunderstanding of how verification is configured and measured in test vs. live environments. Still, these impressions were filtered post-bid, per industry standards.

Why pre-bid avoidance might not be activated: to clarify, turning off pre-bid avoidance and/or blocking is a legitimate decision for brands, especially in the context of GIVT. Crawlers, emulators, scrapers, and similar tools can be powerful aids for creative auditing and various tests, which advertisers may wish to see completed and then monitor post-bid. It is industry standard, and even recommended per the MRC Invalid Traffic Guidelines (see above), to allow such ads to serve and then filter GIVT from monetizable impression counts. DV supports this approach through post-bid reporting, offering full transparency and flexibility to its clients.

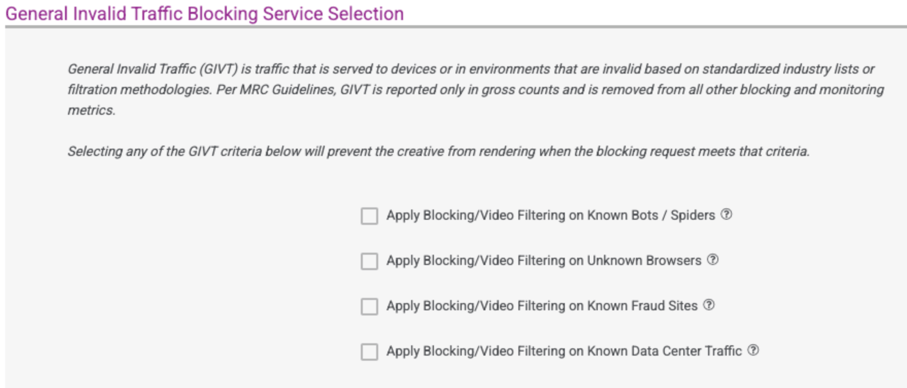

Clients who seek pre-bid filtration of GIVT can turn on avoidance/blocking as they see fit:

If Pre-Bid is Not Supported, Post-Bid is Enabled

Finally, in 9 of the examples, the impressions were served on platforms where user-agent bot avoidance was either not supported or not activated. For the latter, the source code referenced is not DV’s, and does not reflect the effectiveness of our pre-bid solution (in at least one instance, DV saw that the third-party code indicated pre-bid was turned on when it was not yet activated by the advertiser). Still, in every instance cited, DV accurately identified the impressions, enabling post-bid removal from billable impression counts, in accordance with industry standards.

Accurate Detection Across Known Bots & Data Centers

Last but not least, the DV Fraud Lab confirmed that our GIVT detection systems perform exactly as intended—not just on the selectively curated bot impression examples highlighted by Adalytics. Following the report, DV conducted extensive testing and 100% of GIVT stemming from Known Bots was accurately detected post-bid. This includes PTST user agents, which are commonly used by automated crawlers, archivers, and speed-testing services—such as HTTP Archive Bot, which is specifically cited by Adalytics. In accordance with industry standards and MRC guidelines, all GIVT types are excluded from billable impression counts. Similarly, DV confirmed through our recent testing that 100% of Known Data Center traffic was accurately identified in post-bid.

It’s important to note that not every known bot can or should be blocked pre-bid—some are only detectable or relevant after rendering, which is why post-bid filtration is a critical part of industry best practices. In accordance with industry standards and MRC guidelines, all GIVT types—whether caught pre- or post-bid—are excluded from billable impression counts.

Clarifying on User Agents

As we have previously stated, “some media-buying platforms may not currently support pre-bid avoidance of GIVT.” If a platform lacks user-agent lookup functionality or does not prioritize it, bot detection and filtration, at both the pre-bid and post-bid level, still occur using a range of other inputs, including ADIDs (Advertising IDs) and IP addresses. DV’s solution relies on hundreds of signals and data points to detect IVT, across multiple layers of protection, well beyond the user-agent alone. Additionally, these signals are used together, so user-agent bots can be filtered pre-bid if they are also associated with an evasive or invalid IP address, or other relevant input.

For example, for a leading DSP without user-agent lookup functionality, DV’s pre-bid GIVT avoidance consistently achieves a success rate of over 99%—demonstrating that effective protection is enabled through signal diversity. Even so, if user-agent-based pre-bid is not supported, DV applies post-bid detection, with those impressions not counting toward the advertiser’s billable total. This, again, is aligned to industry standards.

In our conversations with The WSJ about their coverage of Adalytics’ report prior to publication, they shared two URLScan user agent impressions provided by Adalytics, which are as follows:

In both cases, we confirmed the impressions were correctly identified by DV and managed appropriately, per industry standards. Note that these impressions no longer appear in Adalytics’ final report, seemingly because they were credibly refuted and do not support its narrative.

Unfortunately, these misrepresentations have led to confusion in the coverage of the report, per AdExchanger:

It’s important to clarify that the suggestion that “pre-bid doesn’t work” is inaccurate and misrepresents how pre-bid protection functions. DV’s pre-bid solutions filter not only GIVT but also large volumes of SIVT, which is much harder to identify, while delivering broader media quality protections. This makes the value of pre-bid protection far greater than the narrow framing suggested in the AdExchanger article or in Adalytics’ report.

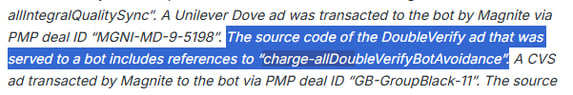

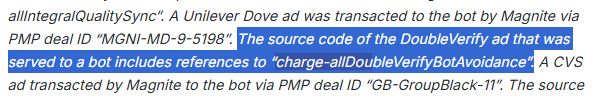

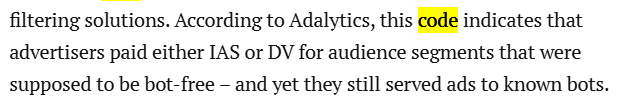

Clarifying on “DV Code”

Adalytics’ reports often misattribute third-party DSP code as belonging to DV. This issue appears again in the latest report, where “charge-allDoubleVerifyBotAvoidance” is referred to as “source code” from DoubleVerify 75 times. See example here:

As we made clear to The WSJ, which was in direct contact with Adalytics in advance of their report’s publication, this is not DV code.

Why does this matter? In at least one of the impressions cited by Adalytics, which included the code “charge-allDoubleVerifyBotAvoidance,” we confirmed that the advertiser had not even enabled DV for pre-bid filtration, demonstrating how unreliable and misleading this metadata interpretation is. Note that we also confirmed the advertiser was not inadvertently charged for pre-bid filtration, as the feature was not enabled on their side. Ultimately, DV does not control the public code that third-party platforms choose to implement.

Importantly, Adalytics did not verify its assumptions with DV before presenting them as fact. As a result, multiple stories have repeated the DV “source code” error, incorrectly describing the code as DV-owned and then drawing inaccurate, broad generalizations based on that error.

According to AdExchanger, for example:

Additionally, it’s worth noting that every example in the Adalytics report referencing DV “source code” or “code” involves URLScan, a bot that does not self-declare. Once again, the reliance on URLScan in the methodology leads to highly misleading conclusions.

More on URLScan

As we have outlined, Adalytics misrepresents URLScan in its analysis in an effort to position a more evasive, non-declaring bot as a self-declaring bot. This is intended to present verification as inept. According to a reporter from The WSJ, for example, who reached out to DV regarding the report on January 29, 2025: Adalytics’ “report suggests the companies lack the competence to handle the most basic of detection tasks and raises the question of whether they can handle more sophisticated cases.”

For clarity, URLScan is a web analysis tool that security researchers, developers, and analysts use to examine website behavior, analyze headers, and review third-party calls. It’s a tool for analysis, and is not inherently malicious.

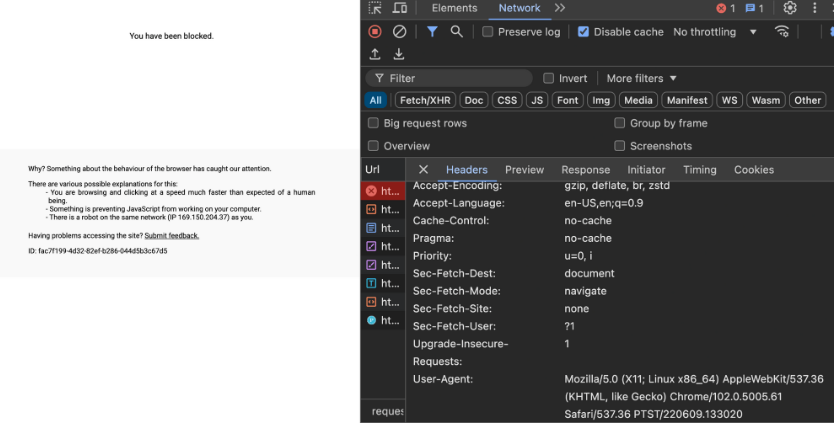

Users can configure URLScan to crawl websites using a variety of user-agents. However, by default, URLScan uses “Latest Google Chrome” as its user-agent, which makes it more evasive by design. In this default setting, URLScan does not self-declare.

In reviewing the URLScan examples cited in Adalytics’ report, all of them, according to publicly available data, are undeclared. Yet, this is not disclosed by Adalytics.

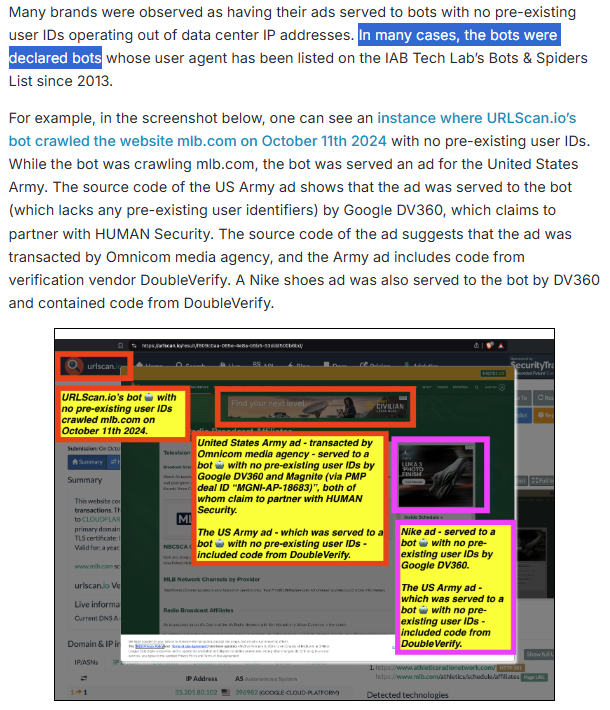

Consider this notable excerpt from the report: “In many cases, the bots were declared bots whose user agent has been listed on the IAB Tech Lab’s Bots & Spiders List since 2013.” Adalytics then presents an example involving an mlb.com crawl by URLScan:

However, that example does not support the claim. The user-agent shown, “Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36”, is not declared. URLScan, in this instance, is not self-identifying, and neither the user-agent nor the tool itself is on the IAB Spiders & Bots List.

Adding to its complexity, URLScan uses headless browser technology to fully render pages, including scripts, tracking pixels, and ad calls, without a visible interface. A headless browser operates like a standard browser but lacks a graphical user interface (GUI). This allows automated systems to interact with web content—executing JavaScript, rendering images, and triggering ad requests—essentially mimicking the behavior of human viewers. URLScan can even solve CAPTCHAs.

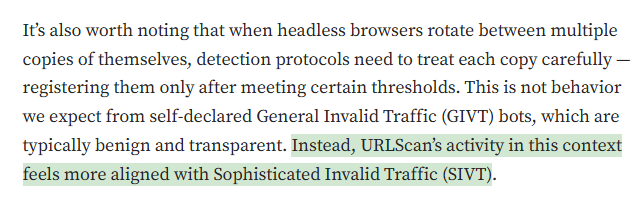

The DV Fraud Lab accurately detects headless browser activity and reports it according to its context. Benign usage of headless browsers is flagged as GIVT, while more evasive headless browsers (paired with other signals for manipulation or evasion) can be reported as SIVT. Since the vast majority of URLScan crawls do not self-declare, we often identify them as SIVT.
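To make the distinction concrete, here is a minimal sketch of context-based labeling for headless browser traffic. It is not DV’s detection logic: the `TrafficSignals` fields and the `classify_headless` function are hypothetical names, and deciding which signals count as evasion (for example, a user-agent that hides the tool’s identity) is an assumption left to the caller.

```python
from dataclasses import dataclass


@dataclass
class TrafficSignals:
    headless: bool          # a headless browser was detected (assumed upstream check)
    evasion_signals: bool   # other signs of manipulation or evasion (assumed upstream check)


def classify_headless(signals: TrafficSignals) -> str:
    """Label headless traffic by context; anything else is out of scope for this sketch."""
    if not signals.headless:
        return "not_headless"
    # Headless plus evasion signals is treated as sophisticated invalid traffic.
    if signals.evasion_signals:
        return "SIVT"
    # Benign headless usage is treated as general invalid traffic.
    return "GIVT"


print(classify_headless(TrafficSignals(headless=True, evasion_signals=False)))  # GIVT
print(classify_headless(TrafficSignals(headless=True, evasion_signals=True)))   # SIVT
```

In both branches the impression is invalid; the label only changes how it is reported.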

For all of these reasons, URLScan is not easy to detect. According to bot research expert Antoine Vastel, headless browser bots like URLScan are “increasingly” difficult to identify due to their sophisticated evasion tactics. According to Vastel, in fact, URLScan more closely resembles SIVT than GIVT:

With that said, of the traffic DV analyzes, headless browser GIVT impressions represent a fraction of a percent and are effectively identified pre-bid (if permitted) and post-bid (if not permitted pre-bid), removing the resulting impressions from billable counts shared with customers through DV’s GIVT disclosure reporting.
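The difference between served and billable impressions can be illustrated with a short sketch. The impression records, the `label` field, and the `billable_impressions` function are hypothetical and stand in for whatever a real reporting pipeline uses.

```python
# Labels treated as invalid traffic in this sketch.
INVALID_LABELS = {"GIVT", "SIVT"}


def billable_impressions(impressions):
    """Return only the impressions whose label is not invalid traffic."""
    return [imp for imp in impressions if imp["label"] not in INVALID_LABELS]


# Hypothetical served impressions: the ad rendered in all four cases.
served = [
    {"id": "imp-1", "label": "valid"},
    {"id": "imp-2", "label": "GIVT"},  # e.g. a benign headless browser crawl
    {"id": "imp-3", "label": "SIVT"},
    {"id": "imp-4", "label": "valid"},
]

billable = billable_impressions(served)
print(len(served), len(billable))  # 4 2
```

All four ads were served, but only two count toward the billable total.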

Note that Adalytics repeatedly references headless browsers in its research without appearing to understand what the term means or how it affects detection, classification, and management.

This confusion is evident in the same mlb.com example cited earlier, where Adalytics claims an ad was served to a declared bot. In reality, the user-agent was undeclared and associated with a headless browser. DV detected the impression accurately and removed it post-bid from billable counts, as the client did not have pre-bid avoidance turned on.

Here are three critical questions you should ask your verification partner about detecting headless browser traffic:

- How does your verification partner’s technology reliably detect headless browsers?

- Can they explain the difference between declared bots and evasive headless browser traffic—and how their system distinguishes between the two?

- If your verification partner lacks pre-bid integration, how does it prevent ads from being served to headless browser environments in real time?

Additionally, URLScan activity often leverages an advanced headless browser plugin known as Puppeteer Extra Stealth, an enhancement recommended in public resources used by professional web scrapers and crawlers, enabling it to be more evasive. Despite this, DV accurately detects and manages this traffic.

It’s worth reiterating that URLScan is not listed on standard “known bots” registries, like IAB Spiders & Bots, and requires more advanced technical capabilities to identify and manage effectively. Adalytics even vaguely touches on this in its report, possibly realizing too late that the tool’s behavior doesn’t support a “declared bot” narrative.

The opaque language, however, is confusing and leads to inaccurate representations in the report’s coverage. See this from AdExchanger:

In reality, URLScan typically operates using manipulated user-agent strings. For example, it often uses “Mozilla/5.0 (Windows NT 10.0; Win64; x64)” instead of including the term “urlscan” in the user-agent. This is misleading because that specific string is the fifth most popular user-agent globally, shared by many legitimate human users. As such, it would not appear on the IAB list, which only includes user-agent strings that clearly identify known bots (e.g., “Googlebot”).
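A simplified sketch shows why list-based matching cannot catch a browser-like user-agent. The token list below is a two-entry placeholder, not the actual IAB Spiders & Bots List (which is licensed and far larger), and `is_declared_bot` is a hypothetical helper that assumes plain substring matching.

```python
# Placeholder tokens for illustration only; not the real IAB list.
DECLARED_BOT_TOKENS = ("googlebot", "bingbot")


def is_declared_bot(user_agent: str, tokens=DECLARED_BOT_TOKENS) -> bool:
    """Return True when the user-agent contains a token that names a known bot."""
    ua = user_agent.lower()
    return any(token in ua for token in tokens)


# User-agent from the mlb.com example discussed in this post.
mlb_example_ua = (
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/129.0.0.0 Safari/537.36"
)
# A self-declaring crawler user-agent for comparison.
googlebot_ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

print(is_declared_bot(mlb_example_ua))  # False
print(is_declared_bot(googlebot_ua))    # True
```

The browser-like string contains nothing that names a bot, so a list lookup alone has nothing to match on.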

In an effort to strengthen the “declared” and “known” misrepresentation, the report also suggests URLScan activity should always be captured pre-bid because it allegedly operates from known data centers. However, in the very small subset of public examples in the report, the DV Fraud Lab confirmed that the associated IPs were not on the Known Data Center list at the time the impressions were served. In these cases, depending on a campaign’s settings and the media-buying platform, post-bid detection (not pre-bid) applies, per industry standards, and the impressions are accurately invalidated and removed from billable counts.
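The timing point matters: a list lookup can only act on entries that existed when the impression was served. The sketch below assumes a hypothetical list format (a CIDR range plus the date it was added) and uses a reserved documentation IP range; it does not reflect the actual TAG Data Center List or DV’s systems.

```python
from datetime import date
from ipaddress import ip_address, ip_network

# Hypothetical entries: (CIDR range, date the range was added to the list).
DATA_CENTER_RANGES = [
    (ip_network("203.0.113.0/24"), date(2025, 1, 10)),
]


def on_list_at(ip: str, served_on: date, ranges=DATA_CENTER_RANGES) -> bool:
    """True if the IP fell inside a listed range that already existed on served_on."""
    addr = ip_address(ip)
    return any(addr in net and added <= served_on for net, added in ranges)


def list_based_stage(ip: str, served_on: date, prebid_enabled: bool) -> str:
    """Where a list lookup could act: pre-bid if enabled and listed, otherwise post-bid."""
    if prebid_enabled and on_list_at(ip, served_on):
        return "pre-bid"
    return "post-bid"


print(list_based_stage("203.0.113.7", date(2025, 1, 5), prebid_enabled=True))  # post-bid
print(list_based_stage("203.0.113.7", date(2025, 2, 1), prebid_enabled=True))  # pre-bid
```

The same IP produces different outcomes depending on whether the range was listed on the serving date and whether pre-bid avoidance was enabled.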

DV’s innovative work on headless browser detection has received attention from the professional fraud-fighting community, with the DV Fraud Lab delivering public talks to share its detection technology for the benefit of the ad industry and beyond. See DV’s 2022 presentation on headless browser detection, as an example.

To this point, DV’s technology regularly and effectively avoids or blocks URLScan impressions pre-bid. The screenshot below shows a typical example of DV accurately identifying URLScan activity on www.wsj.com and successfully applying avoidance and blocking measures. (Even if the impression is not pre-bid avoided, again, we will remove it from billable counts post-bid.)

Example of urlscan.io activity on WSJ.com:

Thanks to DV’s blocking, the VAST returns an empty response, so that the ad isn’t played:

Now compare that to one of the publishers (who we will not identify) praised in Adalytics’ report for “appearing to block” all declared GIVT traffic. Adalytics spotlights this publisher for the successful pre-bid blocking of one of the bots used in its study, HTTP Archive.

However, when analyzing public URLScan data from the same time period of Adalytics’ study, it’s clear that this same publisher does not block URLScan pre-bid, because URLScan does not declare itself as a bot, is not on the IAB’s list, and is generally more evasive. See this example (we have obscured the advertisers).

In other words, Adalytics highlights one bot being blocked while ignoring that the other bot it relies on in its own study—which is more evasive—is not blocked.

As we’ve stated before, and evidenced in this case, these appear to be manipulated findings. Results are selectively showcased that support a premise while excluding counterexamples that would undermine it. This creates confusion, suggesting that blocking all bots in the study pre-bid is straightforward and universally supported, which it’s not. And once again, even if URLScan is not identified pre-bid, that does not mean the publisher failed to identify and exclude it post-bid from billable counts. (To be clear, publishers are not doing anything wrong here.)

To further support the community and provide more education on URLScan, DV has also published this blog post by Gilit Saporta, VP of Product Management, Fraud & Quality at DoubleVerify.

A Mystery Bot?

Given Adalytics’ misuse of URLScan, DV is formally requesting that they publicly disclose the details about the unnamed “mystery bot” referenced in their research. See this section of their report, which seems to indicate that this bot, like URLScan, might also be undeclared.

Note this language, specifically:

In other words, to make its claims, Adalytics appears to be relying on two bots (out of three in total) that are likely undeclared (“does not declare”), not on “known bots” lists (“would likely not be located on the IAB’s list of known bots and spiders”), and that might actually be SIVT.

This has led to wildly inaccurate headlines by publications like AdExchanger, most notably:

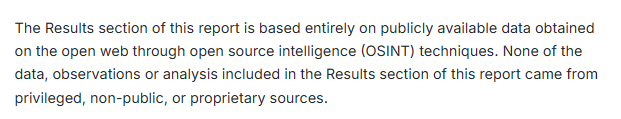

The use of an “anonymous web crawler vendor” also invalidates the following claim by Adalytics, which states that its report “is based entirely on publicly available data.”

If the third bot is not named, this claim is obviously false, as the information is “privileged, non-public” and “proprietary.” Adalytics has previously stated that it relies on “peer-review” of its work. Note this example in relation to its ID misdeclaration report:

Without public disclosure of the bot, there is no opportunity for third parties to conduct a transparent review or replicate the analysis in full.

Questionable Peer Review

Notably, in a blog post last year, Shailin Dhar—a fraud expert cited by Adalytics 12 times in its GIVT report as “an ad tech industry expert,” through previously public statements—had himself criticized Adalytics’ peer-review process.

Specifically, he questioned whether the individuals involved were “qualified to review research of bidstream mechanics and what parameters facilitate auctions,” and went on to suggest that the Adalytics peer review process was likely “a conflict of interest.”

In the same blog post, Dhar cites Adalytics’ ID misdeclaration report as “a hit-piece masked as a research report,” and says Adalytics should “just retract the report and move on.”

Dhar, again, cited 12 times as an “adtech industry expert” in Adalytics’ own report, goes on to say Adalytics doesn’t “really understand ‘how’ ad fraud is committed or activated.”

Relatedly, just days ago, Dhar—speaking again about Adalytics’ ID misdeclaration report—notes: “Adalytics screwed up. They should just own it” and “apologize.”

Adalytics’ History of Misleading Claims

Unfortunately, beyond the above, there is a track record of Adalytics misrepresenting verification technology and data, and drawing false conclusions. Below are additional examples DV has had to correct the record on either publicly or behind the scenes with partners and clients:

- MFA Reports – In 2023-2024, Adalytics claimed DV failed to block MFA (Made for Advertising) sites. However, DV had already properly classified the sites in Adalytics’ report before these reports were published.

- DV Tag ≠ Advertiser Settings – In multiple brand safety reports, Adalytics has wrongly assumed that the appearance of DV tags means avoidance settings are active. This is inaccurate.

- DSP Code ≠ Advertiser Settings – Similarly, Adalytics has wrongly assumed that the appearance of DSP verification code means avoidance settings are active and buyers are paying. This is also inaccurate.

- Forbes Situation – Adalytics alleged DV failed to flag a Forbes subdomain as MFA. In fact, DV had already classified the domain correctly and reported on it accurately. The MRC has independently audited and confirmed this.

Unfortunately, as we have seen in this analysis, many of these claims are then repeated in media coverage, with limited opportunity to correct the record before publication.

IVT Questions to Ask Partners

Before working with any vendor to protect campaigns from IVT, advertisers should ask the following questions to ensure partners are credible and capable of protecting campaigns from GIVT and SIVT:

- Are you accredited by any independent third-party organization to measure, detect, or report on invalid traffic (IVT)?

- Have your methods been reviewed by recognized experts in ad fraud or bot detection?

- How do you detect and manage headless browser bots or other advanced forms of GIVT without relying on delayed or manual “clawback” processes through my DSP?

- What protections do you offer against sophisticated invalid traffic (SIVT), including evasive threats that are not easily caught in real time?

- How much advertiser spend is typically lost before your system flags or mitigates a new fraud tactic?

- Are you currently involved in any litigation related to your research, methods, or findings?

- How is advertiser data collected, handled, and stored—and is it ever shared with outside parties?

- How often do you refresh your Known Bots and Known Data Center IP lists to ensure ongoing relevance?

- How do you classify and manage bots like URLScan, and what specific steps do you take to prevent them from interfering with ad campaigns?

- Since your founding, how many SIVT schemes have you directly uncovered, disclosed, or helped stop on behalf of clients? The industry?

An Expert in Fighting Fraud

An essential question in combatting fraud is: What expertise do you have on your team?

At DV, fraud detection and prevention is one of our core competencies. Since 2008, DV has been at the forefront of combatting new and emerging types of ad fraud, driving media quality and effectiveness for advertisers (optimize spend) and publishers (receive that spend). Our team of experts has an established track record of discovering the most sophisticated ad fraud schemes in the industry.

The DV Fraud Lab is our dedicated specialist team of data scientists, analysts, cybersecurity researchers, and developers. Together, we analyze billions of impressions every day, identifying comprehensive fraud and SIVT, from hijacked devices to bot fraud and injected ads. Our AI-backed deterministic methodology results in greater accuracy, wider coverage and, ultimately, superior protection for brands.

Experts in the Fraud Lab have been acknowledged by the professional community on numerous occasions for their innovative work, e.g. on international stages such as Open JS (see DV Fraud Lab talk about Stealthy Headless Browser Bots in 2022), RSA Security Conference (see “When Traffic Is Spiking, How Do You Know It’s Legit?” in 2020), CyberWeek (see Roni Yungleson and Gilit Saporta on FraudCon Bots Panel, together with IBM Security and AppsFlyer in 2023), and AI Week (see Yuval Rubin and Gilit Saporta on “The Bot that Liked Podcasts” in 2024).

As part of their passion and commitment to fraud fighting, DV Fraud Lab experts volunteer for many cross-organizational initiatives that benefit digital Trust & Safety. For example, Gilit Saporta, VP Product Management, Fraud & Quality, has co-authored a Fraud Fighting book (with author royalties donated directly to UNICEF) that was published by O’Reilly in 2022. The book covers best practices and code exercises gathered over 25 years of experience in fighting fraud, and some of the book’s content was also made publicly available via a free video series called “How to Measure Fraud Metrics” on FraudLab.com.

DV Fraud Lab members also serve in various leadership functions at the annual academic FraudCon at Cyberweek, a leading international conference on cyber fraud. Moreover, many of the Fraud Lab members contribute frequently to their professional community, for example via the Fraud Fighters Meetups Series, where professionals mentor each other to help grow the next generation of experts in the space. The public side of DV’s Fraud Lab work is just the tip of the iceberg in fostering a better industry and sharing case-studies for the benefit of all fraud researchers (see Gal Badnani’s blogpost “Making a Better Internet, Fighting One Fraud Scheme at a Time”).

At DV, honing excellence and expertise of the Fraud Lab plays a central role in protecting global brands from invalid traffic and sophisticated ad fraud schemes. Visit DV’s Transparency Center to find more in-depth work from established and respected Fraud Lab experts.

Understanding and Managing GIVT

GIVT is invalid traffic that can be identified using routine means of filtration, such as search engine crawlers, creative auditing bots, stress testing bots, AI scrapers, and other automated tools used for legitimate purposes. Unlike SIVT, which involves fraudulent activities like ad stacking or domain spoofing, GIVT typically lacks malicious intent. The IAB and MRC ensure robust industry standards and regulations are in place to protect advertisers from wasted ad spend. For a deeper understanding of 2024 GIVT trends, please refer to our blog post.

DV’s Pre-Bid Avoidance for IAB Known Bots

DV actively uses the TAG Data Center List, IAB Known Browsers, and IAB Spiders and Bots List, which are widely accepted as standardized resources for identifying GIVT. As a contributor and collaborator with the teams and Tech Lab of TAG and the IAB, DV helps enhance the accuracy and relevance of these lists, reflecting the latest trends in automated traffic.

The IAB Known Browsers and IAB Spiders and Bots lists are automatically ingested by DV on a weekly basis. By using DV services, which automatically include all industry-standard resources, the vast majority of GIVT can be avoided. However, advertisers must also ensure that GIVT and SIVT blocking is activated where applicable. Not all campaigns are set up to block GIVT pre-bid, even when the option is available.

Additional Considerations for Advertisers

By using DV services, which automatically include all industry-standard resources, GIVT can be avoided. However, advertisers must also consider the following.

- Ensure Proper Activation of Pre-Bid Avoidance: Actively review and optimize your campaign settings to ensure GIVT and SIVT blocking is activated where applicable. Not all campaigns are automatically set up to block GIVT pre-bid.

- Understand Platform Limitations: Some media-buying platforms may not support pre-bid avoidance of GIVT. Platforms that support user agent lookups are better equipped to preemptively filter GIVT. Advertisers should inquire with their ad tech partners about these limitations and prioritize platforms that fully support pre-bid GIVT avoidance.

- Address the GIVT Challenges Presented by Inclusion Lists: Some media-buying partners may rely on inclusion lists or post-bid measurement methods, which do not proactively address GIVT. While IAB and TAG lists are valuable, they don’t cover the entire spectrum of GIVT bots. DV’s proprietary GIVT identification and pre-bid capabilities provide an additional layer of protection.

DV Has You Covered

At DV, we offer a comprehensive suite of solutions to tackle GIVT both before and after the bidding process, ensuring advertisers maintain appropriate treatment and accurate reporting of both SIVT and GIVT. DV’s solutions are accredited by the MRC for pre-bid IVT detection on all of its integration partners and for post-bid GIVT and SIVT prevention and measurement on desktop, mobile web, mobile apps and CTV environments.

- DV’s Proactive Approach to Ad Fraud: the DV Fraud Lab continuously explores and mitigates new and old techniques used by fraudsters. While fraudsters’ tactics are always evolving, our continuous updates and proactive measures ensure that we stay ahead of fraudulent activities and that any vulnerabilities are addressed promptly.

- DV’s Consistent Efforts in Fraud Detection: DV has a strong track record of releasing findings on how fraudsters evade detection. In 2024 alone, we identified and addressed 50 variants of fraud schemes, demonstrating our capability to catch more fraud than any other vendor.

- DV’s Commitment to GIVT Bot Filtration: DV is committed to GIVT bot filtration, including known crawlers and spiders, maintained automatically using the TAG Data Center List and IAB Known Browsers and IAB Spiders and Bots List, which are widely accepted as a standardized resource for identifying GIVT. We collaborate closely with the IAB team and contribute to the IAB lists when necessary.
