In May 2017, the IAB Tech Lab launched ads.txt, a simple, powerful solution to help prevent the sale of unauthorized ad inventory. With ads.txt, publishers can host a text file that transparently lists all companies authorized to sell their inventory, either directly or indirectly (i.e., resellers).

Programmatic platforms process these files to qualify the inventory they purchase. When they receive a bid request for an ad impression, they check the selling party against the ads.txt file hosted on the publisher’s domain. If the selling party (e.g., an SSP, exchange, or reseller) is not listed, the request is flagged as unauthorized, and the ad is either blocked (in strict ads.txt implementations) or marked as risky (in more permissive ones that accept indirect sales).
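The check described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular platform implements it: the function names, the sample file and the domains (`ssp.example`, `reseller.example`, `publisher.example`) are hypothetical, and only the first three comma-separated fields of each record are used.

```python
from typing import List, NamedTuple, Optional


class Entry(NamedTuple):
    ad_system: str     # domain of the SSP, exchange, or reseller
    account_id: str    # seller account ID inside that ad system
    relationship: str  # "DIRECT" or "RESELLER"


def parse_ads_txt(text: str) -> List[Entry]:
    """Return the data records in an ads.txt body, skipping comments and variables."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        fields = [f.strip() for f in line.split(",")]
        if len(fields) < 3:
            continue  # blank line, variable (e.g. contact=...), or malformed record
        relationship = fields[2].upper()
        if relationship in ("DIRECT", "RESELLER"):
            entries.append(Entry(fields[0].lower(), fields[1], relationship))
    return entries


def seller_relationship(entries: List[Entry], ad_system: str, account_id: str) -> Optional[str]:
    """Return the listed relationship for a seller, or None if it is not authorized."""
    for entry in entries:
        if entry.ad_system == ad_system.lower() and entry.account_id == account_id:
            return entry.relationship
    return None


# Hypothetical file and bid-request values, for illustration only.
sample = """
# ads.txt for publisher.example
ssp.example, pub-001, DIRECT
reseller.example, 98765, RESELLER
contact=adops@publisher.example
"""
entries = parse_ads_txt(sample)
print(seller_relationship(entries, "ssp.example", "pub-001"))  # DIRECT
print(seller_relationship(entries, "unknown.example", "42"))   # None
```

A `None` result corresponds to the "unauthorized" case: a strict buyer would drop the request, while a more permissive one might only lower its priority.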

Ads.txt has become a key standard for maintaining integrity in programmatic advertising, fostering greater transparency and trust in the value chain, and its success has led to extensions like app-ads.txt for mobile apps and streaming/CTV in 2019.

However, bad actors can circumvent ads.txt or leverage the standard to simulate legitimacy. For example, in late 2018, the DV Fraud Lab identified a network designed to generate high volumes of non-human traffic across hundreds of websites. In this scheme, bots scraped content from legitimate websites and created “spoofed” sites with URLs designed to appear original. These sites displayed the scraped content along with fraudulent ad slots. The scheme’s operators then sold these fraudulent ad slots — using the spoofed URLs — through authorized resellers listed on the legitimate publishers’ ads.txt files. This made the fraudulent ad inventory appear legitimate, as it seemed to originate from a valid site via an authorized seller. See more on the scheme here.

How Bad Actors Manipulate Ads.txt to Generate Fraudulent Ad Inventory

Here is a hypothetical example: Let’s say The New York Tribune (a mock publisher) has the following ads.txt file:

google.com, 12345, DIRECT

example-reseller.com, 67890, RESELLER

big-ad-network.com, 24680, RESELLER

Step 1: Scrape the real site.

The fraudsters use a bot that visits thenewyorktribune.com and scrapes its content — headlines, images and layout. It then recreates the site on a fake domain, say newyork-tribune-news.com, which looks nearly identical.

Step 2: Trick the system into believing it’s a real visit.

The bot sets up a controlled browsing environment:

- It makes network requests that appear to come from real users.

- It spoofs the Referer header to make it look like visits are happening on the legitimate The New York Tribune.

- It injects ads and simulates clicks or engagement metrics to make the activity seem legitimate.

Step 3: Gain access to a reseller.

The fraudsters apply for an account with example-reseller.com (a real, but less tightly controlled reseller).

- They pretend to be a legitimate publisher with “exclusive” traffic.

- The reseller doesn’t carefully vet every new publisher and approves their account.

Step 4: Sell fake ad slots.

- Now, the fraudsters sell ad space on their fake site through example-reseller.com, which is listed as an authorized reseller in The New York Tribune’s ads.txt file.

- Advertisers see “The New York Tribune” in their reports (since the URL is spoofed) and assume their ads ran on the real site.

Step 5: Profit without delivering real traffic.

- The fake site never had real human visitors — just bots.

- The fraudsters collect real ad revenue from brands who believe they’re paying for ads on a premium publisher.

- The advertiser gets zero real engagement, but the fraudsters still get paid.

This is how fraudsters bypass ads.txt protections and steal ad dollars while making the fraud seem legitimate on paper. Since the launch of ads.txt, DV has observed over 100 examples of this type of scheme, with a significant increase over the past five years.
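To see why the ads.txt check alone does not catch this, consider the following simplified sketch. It assumes the check is keyed only on the domain a bid request declares and on the seller identity it carries, and that the request arrives with the reseller's listed account ID; the function name and data layout are hypothetical, while the domains and IDs come from the mock example above.

```python
from typing import Dict, Set, Tuple

# (ad system, account ID) pairs from the mock publisher's ads.txt above.
AUTHORIZED: Dict[str, Set[Tuple[str, str]]] = {
    "thenewyorktribune.com": {
        ("google.com", "12345"),
        ("example-reseller.com", "67890"),
        ("big-ad-network.com", "24680"),
    }
}


def passes_ads_txt(declared_domain: str, seller: str, account_id: str) -> bool:
    """True if the seller is listed in the ads.txt of the domain the request declares."""
    return (seller, account_id) in AUTHORIZED.get(declared_domain, set())


# The request declares the real publisher's domain, although the page is actually
# served from newyork-tribune-news.com, a fact this check never sees.
spoofed = passes_ads_txt("thenewyorktribune.com", "example-reseller.com", "67890")

# Declared honestly, the same impression would fail, because the fake domain
# has no entry authorizing the reseller.
honest = passes_ads_txt("newyork-tribune-news.com", "example-reseller.com", "67890")

print(spoofed, honest)  # True False
```

The check answers "is this seller authorized for the declared domain?", not "did the impression really occur on that domain?". Closing that gap requires signals outside ads.txt.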

Less Sophisticated, Just as Dangerous

While the scheme described above is sophisticated, exploiting ads.txt to defraud publishers and advertisers, we consistently observe less sophisticated, lower-effort fraud that warrants awareness in the ecosystem.

Fraud at the Front Door: Misleading Publishers

Fraud often enters through the front door. For example, fraudsters, posing as legitimate companies, may persuade publishers to add them as authorized resellers in their ads.txt files by promising “unique” technology or exclusive demand that will increase revenue. Publishers focused on maximizing yield and ensuring ad slots are filled may accept these claims in good faith but without full visibility into potential risks. Once listed in ads.txt, these resellers gain access to the publisher’s inventory, which they can sell or exploit for fraud.

Bloated Ads.txt Files: A Gateway for Fraud

More commonly, however, fraud spreads when publishers add new lines to their ads.txt files without removing outdated or incorrect entries, causing the files to become bloated. This can complicate verification and allow unauthorized sellers to linger in the ecosystem, increasing the risk of fraud.

The Risk of Lax Vetting Standards

Alternatively, fraud occurs when a legitimate reseller with lax vetting standards unknowingly enables bad actors to operate across its network, allowing fraud to scale. This issue has contributed to the rise of supply path optimization (SPO) in advertising — a strategy aimed at reducing the number of intermediaries to minimize fraud and waste. Fewer resellers mean fewer vulnerabilities, lowering the likelihood of fraudulent activity.

How Ads.txt Copying Amplifies the Problem

This problem is compounded in publisher networks, where ads.txt files are copied across multiple properties. Often, sites within a publisher network will share identical ads.txt files by design.

While this standardization streamlines operations, it also amplifies risk: if a network includes a “bad” reseller in its ads.txt file, that reseller is automatically copied across the other publishers in the network. Programmatic platforms then bulk ingest the ads.txt files for efficiency. While this speeds up processes, it also allows fraudulent sellers to spread unchecked. A single bad actor listed in one ads.txt file can quickly appear in many others, making fraud more widespread, harder to detect and even more difficult to eliminate.

Synthetic Echo Takes Advantage of Ads.txt

The limitations of ads.txt are evident in Synthetic Echo, a network of over 200 AI-generated, ad-supported websites recently uncovered by the DV Fraud Lab.

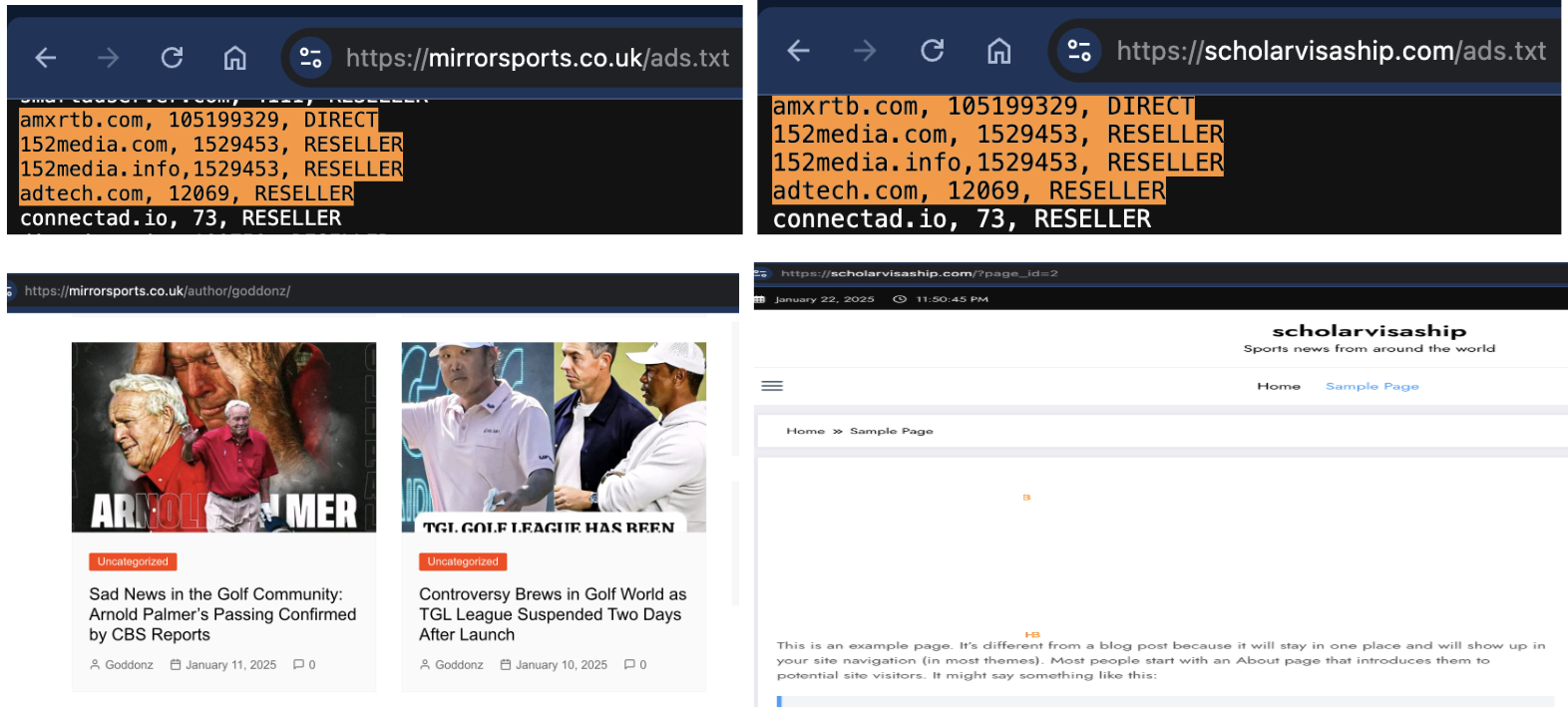

Monetized through multiple SSPs and exchanges, Synthetic Echo exists solely to churn out low-quality, AI-generated content and capture ad revenue. The network also employs deceptive domain names — such as espn24.co.uk, nbcsportz.com, nbcsport.co.uk, cbsnewz.com, cbsnews2.com, bbcsportss.co.uk, 247bbcnews.com, foxnigeria.com.ng and more — to mislead programmatic platforms and buyers into mistaking them for legitimate publishers.

Ads.txt Manipulation

Extending the findings of our January report on Synthetic Echo, a new follow-up DV analysis, shared here, shows the deception now includes ads.txt files.

An examination of Synthetic Echo sites found near-identical ads.txt files replicated across multiple properties. In fact, DV investigators uncovered additional fraudulent sites simply by identifying repeated ads.txt files, highlighting how fraudsters manipulate this system to scale their schemes.
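One way to surface repeated ads.txt files is to fingerprint each file after normalizing it, then group domains that share a fingerprint. The sketch below is an illustration of that idea only, not a description of DV's tooling; the function names and the `site-*.example` domains are hypothetical.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List, Set


def normalized_records(ads_txt: str) -> Set[str]:
    """Reduce a file to its records, ignoring comments, spacing, case and order."""
    records = set()
    for raw in ads_txt.splitlines():
        line = raw.split("#", 1)[0]
        fields = [f.strip().lower() for f in line.split(",")]
        if len(fields) >= 3:
            records.add(",".join(fields[:3]))
    return records


def fingerprint(records: Set[str]) -> str:
    """Stable hash of a record set."""
    return hashlib.sha256("\n".join(sorted(records)).encode("utf-8")).hexdigest()


def repeated_files(files: Dict[str, str]) -> List[List[str]]:
    """Group domains whose ads.txt files contain exactly the same records."""
    groups = defaultdict(list)
    for domain, body in files.items():
        records = normalized_records(body)
        if records:  # ignore empty or unparseable files
            groups[fingerprint(records)].append(domain)
    return sorted(sorted(g) for g in groups.values() if len(g) > 1)


# Hypothetical crawl results.
files = {
    "site-a.example": "ssp.example, 111, DIRECT\nexchange.example, 222, RESELLER\n",
    "site-b.example": "# copied\nEXCHANGE.example,222,reseller\nssp.example, 111, DIRECT # main\n",
    "site-c.example": "ssp.example, 333, DIRECT\n",
}
print(repeated_files(files))  # [['site-a.example', 'site-b.example']]
```

Identical files are also common by design within legitimate publisher networks, so a shared fingerprint is a prompt for investigation rather than proof of fraud.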

Ads.txt Plagiarism

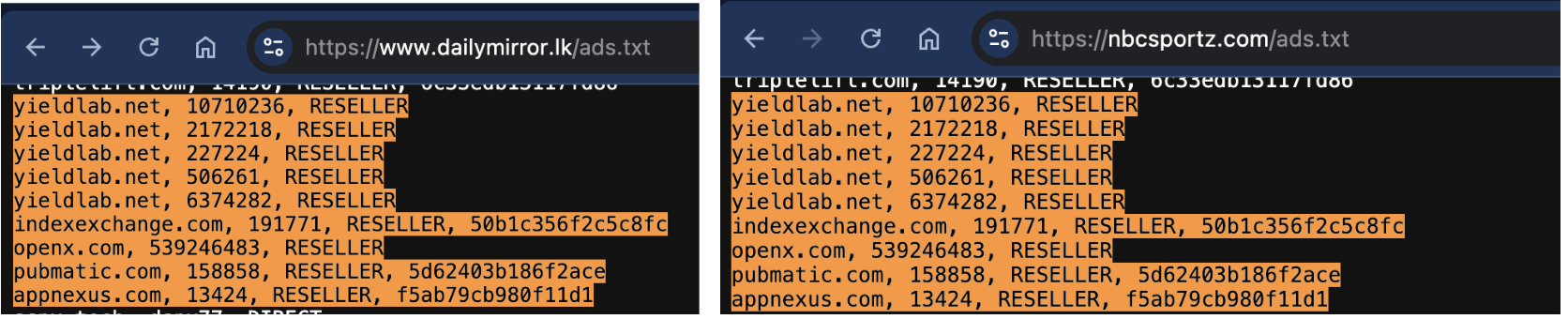

More concerning, however, is that many Synthetic Echo sites have copied ads.txt entries directly from reputable publishers. For example, the ads.txt file for NBCSportz.com lifted sections from trusted media, like CNN.com and Daily Mirror Sri Lanka.

This kind of plagiarism doesn’t imply vulnerabilities or wrongdoing by reputable publishers, but it underscores why programmatic platforms must remain vigilant to detect and block deceptive copying.

https://www.dailymirror.lk/ads.txt vs https://nbcsportz.com/ads.txt

https://edition.cnn.com/ads.txt vs https://nbcsportz.com/ads.txt

Another site in the Synthetic Echo network, breezysports.co.uk, shares large sections of ads.txt entries with Cracked.com. While both sites rely on ads, their editorial quality and design differ vastly. Here, too, the Synthetic Echo site has copied ads.txt entries directly from a reputable publisher.
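Partial copying like this will not produce identical files, but it can be measured as the share of one file's records that also appear in another. The following is a rough sketch under that assumption; the function names, sample records and the idea of reviewing above some threshold are illustrative, not DV's method.

```python
from typing import Set, Tuple

Record = Tuple[str, str, str]  # (ad system, account ID, relationship)


def records(ads_txt: str) -> Set[Record]:
    """Extract normalized records from an ads.txt body."""
    found = set()
    for raw in ads_txt.splitlines():
        fields = [f.strip() for f in raw.split("#", 1)[0].split(",")]
        if len(fields) >= 3:
            found.add((fields[0].lower(), fields[1], fields[2].upper()))
    return found


def copied_share(suspect: str, reference: str) -> float:
    """Fraction of the suspect file's records that also appear in the reference file."""
    s, r = records(suspect), records(reference)
    return len(s & r) / len(s) if s else 0.0


# Hypothetical files: three of the suspect's four records match the reference.
reference = """ssp.example, 1001, DIRECT
exchange.example, 2002, DIRECT
reseller.example, 3003, RESELLER
"""
suspect = """ssp.example, 1001, DIRECT
exchange.example, 2002, DIRECT
reseller.example, 3003, RESELLER
other-network.example, 9009, RESELLER
"""
print(copied_share(suspect, reference))  # 0.75
```

RESELLER lines legitimately recur across publishers that share a partner, so matching DIRECT lines between unrelated sites are likely the more telling signal.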

By plagiarizing ads.txt files, Synthetic Echo engages in a sophisticated, multi-layered form of “programmatic impersonation” designed to deceive programmatic platforms. The AI-generated sites don’t just use URLs and domain names that closely resemble those of well-known publishers; they also copy ads.txt entries to make it seem as though they are trusted by major ad platforms.

By exploiting these trust signals, fraudsters trick SSPs and DSPs into recognizing them as part of a legitimate network. As a result, advertisers unknowingly buy inventory from these sites, diverting ad spend away from legitimate publishers and exacerbating waste in the ecosystem.

Why Maintaining a “Tight” Ads.txt File Matters

Responsible publishers prioritize a clean, well-maintained ads.txt file with only trusted sellers to minimize fraud risk and protect their brand reputation. They actively audit their files, share best practices and emphasize regular reviews while limiting unnecessary resellers.

Based on DV’s findings and recommendations regarding fraud that exploits ads.txt vulnerabilities, here are best practices for both publishers and programmatic platforms to enhance security and transparency in the ad supply chain.

Best Practices for Publishers

- Limit ads.txt entries to trusted partners.
  - Only add direct sellers or verified resellers with clear, contractual relationships.
  - Ensure ad networks and resellers only offer inventory through direct relationships with your organization.

- Monitor domains and code execution closely.
  - Continuously track all domains and scripts running in your digital environment.
  - Restrict authorized domains and block any unauthorized ones.

- Keep ads.txt files concise and focused.
  - Prioritize direct relationships with premium partners.
  - Minimize resellers, as excessive listings create opportunities for fraud.

- Regularly audit and validate ads.txt files.
  - Check for syntax errors and unexpected changes.
  - Use validation tools to ensure the file remains error-free and up-to-date.

- Use ads.txt alongside Sellers.json for extra security.
  - Sellers.json provides additional transparency into who is selling inventory.
  - Consider working with independent ad fraud vendors for additional verification.

- Recognize that ads.txt is not a complete failsafe.
  - Stay vigilant for sophisticated fraud schemes that attempt to bypass ads.txt protections.
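As an illustration of the audit step in the list above, the sketch below flags malformed lines and duplicate records and counts direct versus reseller entries. It is a simplified example, not a full validator: the function name and report keys are hypothetical, the sample extends the mock file from earlier in this article, and only the basic record shape is checked.

```python
from collections import Counter
from typing import Dict, List

VALID_RELATIONSHIPS = {"DIRECT", "RESELLER"}


def audit_ads_txt(text: str) -> Dict[str, object]:
    """Report malformed line numbers, duplicate records and relationship counts."""
    malformed: List[int] = []
    seen: Counter = Counter()
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue  # blank line or comment
        name, sep, _ = line.partition("=")
        if sep and "," not in name:
            continue  # variable record such as contact= or subdomain=
        fields = [f.strip() for f in line.split(",")]
        well_formed = (
            len(fields) >= 3
            and fields[0] != ""
            and fields[1] != ""
            and fields[2].upper() in VALID_RELATIONSHIPS
        )
        if not well_formed:
            malformed.append(number)
            continue
        seen[(fields[0].lower(), fields[1], fields[2].upper())] += 1
    resellers = sum(1 for record in seen if record[2] == "RESELLER")
    return {
        "malformed_lines": malformed,
        "duplicates": sorted(r for r, n in seen.items() if n > 1),
        "direct": len(seen) - resellers,
        "resellers": resellers,
    }


sample = """google.com, 12345, DIRECT
example-reseller.com, 67890, RESELLER
big-ad-network.com, 24680, RESELLER
example-reseller.com, 67890, RESELLER
old-partner.example, 555
contact=adops@publisher.example
"""
report = audit_ads_txt(sample)
print(report["malformed_lines"])  # [5]
print(report["duplicates"])       # [('example-reseller.com', '67890', 'RESELLER')]
print(report["direct"], report["resellers"])  # 1 2
```

Running a report like this on a schedule, and comparing it with the previous run, is one simple way to catch unexpected changes and a growing reseller count.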

Best Practices for Programmatic Platforms (SSPs, DSPs, Ad Exchanges)

- Verify ads.txt integrity before ingestion.
  - Cross-check entries for duplicate or suspicious listings.
  - Flag unexpected resellers or excessive intermediaries.

- Detect and block copied or manipulated ads.txt files.
  - Identify cloned ads.txt files across multiple domains, a common fraud signal.
  - Monitor domain reputation and investigate anomalies.

- Prioritize direct sellers and minimize reliance on resellers.
  - Encourage advertisers to choose direct-seller inventory where possible.
  - Assign lower priority or stricter vetting to indirect reseller traffic.

- Use Sellers.json and OpenRTB SupplyChain Object for deeper validation.
  - Require Sellers.json alignment with ads.txt entries.
  - Analyze SupplyChain Object data to confirm that impressions flow through legitimate paths.

- Flag and remove bad actors quickly.
  - Maintain a real-time fraud detection system to identify and exclude fraudulent sellers.
  - Share threat intelligence with industry groups and partners to prevent fraudsters from reappearing under new domains.

- Regularly audit inventory sources.
  - Conduct ongoing investigations into high-risk inventory sources.
  - Educate advertisers and publishers on emerging fraud tactics.
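The Sellers.json alignment check in the list above could look roughly like the following. This sketch assumes the commonly used reading that a DIRECT ads.txt line should correspond to a `PUBLISHER` or `BOTH` seller and a RESELLER line to an `INTERMEDIARY` or `BOTH` seller; the function names, result labels and sample data are hypothetical, and confidential sellers that omit a domain are simply not domain-checked.

```python
import json
from typing import Dict

# Assumed mapping from ads.txt relationship to acceptable sellers.json seller_type.
EXPECTED_TYPES = {
    "DIRECT": {"PUBLISHER", "BOTH"},
    "RESELLER": {"INTERMEDIARY", "BOTH"},
}


def index_sellers(sellers_json: str) -> Dict[str, dict]:
    """Index an ad system's sellers.json by seller_id."""
    data = json.loads(sellers_json)
    return {str(s["seller_id"]): s for s in data.get("sellers", [])}


def check_alignment(publisher_domain: str, account_id: str,
                    relationship: str, sellers: Dict[str, dict]) -> str:
    """Compare one ads.txt entry with the ad system's sellers.json."""
    seller = sellers.get(account_id)
    if seller is None:
        return "missing"
    if seller.get("seller_type", "").upper() not in EXPECTED_TYPES[relationship.upper()]:
        return "type_mismatch"
    listed = seller.get("domain")
    if relationship.upper() == "DIRECT" and listed and listed.lower() != publisher_domain.lower():
        return "domain_mismatch"
    return "ok"


# Hypothetical sellers.json published by an ad system.
sellers = index_sellers(json.dumps({"sellers": [
    {"seller_id": "111", "name": "Publisher Example",
     "domain": "publisher.example", "seller_type": "PUBLISHER"},
    {"seller_id": "222", "name": "Reseller Co",
     "domain": "reseller.example", "seller_type": "INTERMEDIARY"},
]}))

print(check_alignment("publisher.example", "111", "DIRECT", sellers))    # ok
print(check_alignment("lookalike.example", "111", "DIRECT", sellers))    # domain_mismatch
print(check_alignment("publisher.example", "222", "DIRECT", sellers))    # type_mismatch
print(check_alignment("publisher.example", "999", "RESELLER", sellers))  # missing
```

The second case is the one most relevant to copied ads.txt files: a lookalike site that lifts a reputable publisher's DIRECT line ends up claiming an account whose Sellers.json record names a different domain.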

By following these best practices, publishers can reduce their exposure to fraud, and programmatic platforms can prevent fraudulent inventory from infiltrating the ecosystem. A tight, well-maintained ads.txt strategy benefits everyone — protecting ad spend, ensuring transparency and maintaining a cleaner, more trustworthy programmatic marketplace.

This piece is part of DV’s Transparency Center, a dedicated portal designed to educate the industry about DV technology and measurement. By providing detailed explanations, insights and timely statements on key issues, we aim to foster trust and transparency within the digital advertising ecosystem.